Image Segmentation

- Different types of segmentation

- Semantic segmentation (every pixel is classified)

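- Instance segmentation (object-level: each individual object gets its own mask, so two people get two separate masks)

- Panoptic segmentation (combination of semantic and instance segmentation: every pixel gets a class, and pixels belonging to countable objects also get an instance ID)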
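To make the three output types concrete, here is a toy sketch of how each might be represented for a 4x4 image containing two people. The layout, the class ids (0 = background, 1 = person) and the dictionary format for instances are assumptions for illustration, not a standard dataset format.

```python
import numpy as np

# Toy 4x4 scene: two people (class 1) on background (class 0).
# Class ids and layout are made up for illustration.

# Semantic segmentation: one class id per pixel; both people share label 1.
semantic = np.array([
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
])

# Instance segmentation: one binary mask (plus a class id) per object.
instances = [
    {"class_id": 1, "mask": np.zeros((4, 4), dtype=bool)},
    {"class_id": 1, "mask": np.zeros((4, 4), dtype=bool)},
]
instances[0]["mask"][:2, :2] = True
instances[1]["mask"][2:, 2:] = True

# Panoptic segmentation: every pixel gets a class id AND an instance id
# (instance id 0 here means background, which has no instances).
panoptic_class = semantic.copy()
panoptic_instance = np.zeros((4, 4), dtype=int)
for inst_id, inst in enumerate(instances, start=1):
    panoptic_instance[inst["mask"]] = inst_id
```

The semantic map cannot tell the two people apart; the instance list can, but says nothing about background pixels; the panoptic pair of maps covers both.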

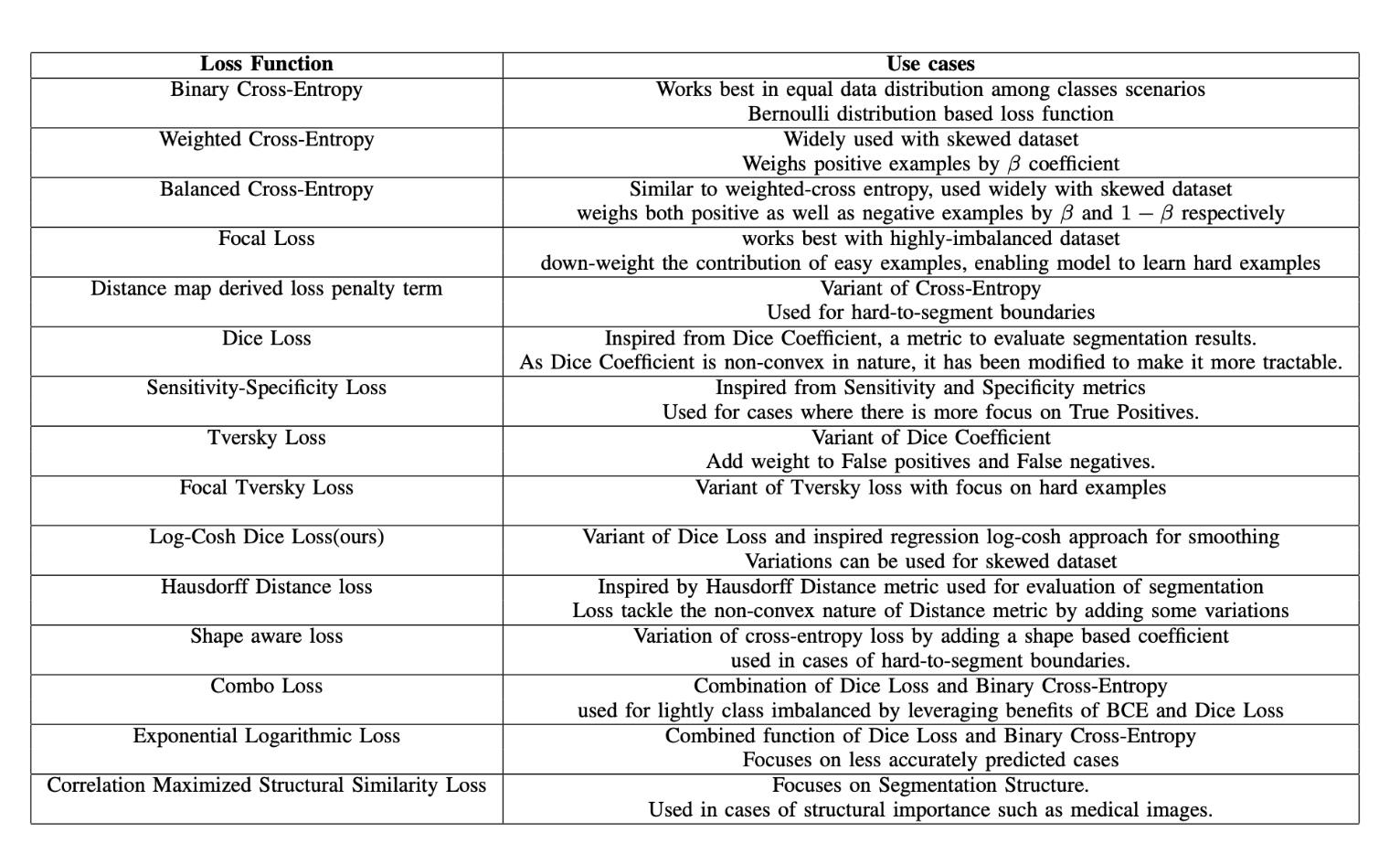

- After convolutions and pooling, we have many feature maps with reduced spatial dimensions. This compressed latent space representation can be used for many things, such as classification, upsampling, etc.

- Auto-encoders
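A minimal PyTorch sketch of the idea above: an encoder of convolutions and pooling compresses a 32x32 image into smaller feature maps (the latent representation), and a decoder upsamples it back to the input size. The class name `TinyAutoencoder` and all layer sizes are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

class TinyAutoencoder(nn.Module):
    """Toy encoder-decoder; layer sizes are arbitrary illustration values."""

    def __init__(self):
        super().__init__()
        # Encoder: convolutions + pooling shrink H and W by 4 and add channels.
        self.encoder = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1), nn.ReLU(),
            nn.MaxPool2d(2),  # H/2, W/2
            nn.Conv2d(16, 32, kernel_size=3, padding=1), nn.ReLU(),
            nn.MaxPool2d(2),  # H/4, W/4
        )
        # Decoder: upsample the latent maps back to the input resolution.
        self.decoder = nn.Sequential(
            nn.Upsample(scale_factor=2, mode="nearest"),
            nn.Conv2d(32, 16, kernel_size=3, padding=1), nn.ReLU(),
            nn.Upsample(scale_factor=2, mode="nearest"),
            nn.Conv2d(16, 3, kernel_size=3, padding=1),
        )

    def forward(self, x):
        latent = self.encoder(x)
        return latent, self.decoder(latent)

model = TinyAutoencoder()
x = torch.randn(1, 3, 32, 32)
latent, recon = model(x)
print(latent.shape)  # torch.Size([1, 32, 8, 8])
print(recon.shape)   # torch.Size([1, 3, 32, 32])
```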

Upsampling

- Upsampling maps the latent space representation back to the original size of the image

- Upsampling happens in the decoder

- Upsampling is used for image generation, enhancement, mapping and more

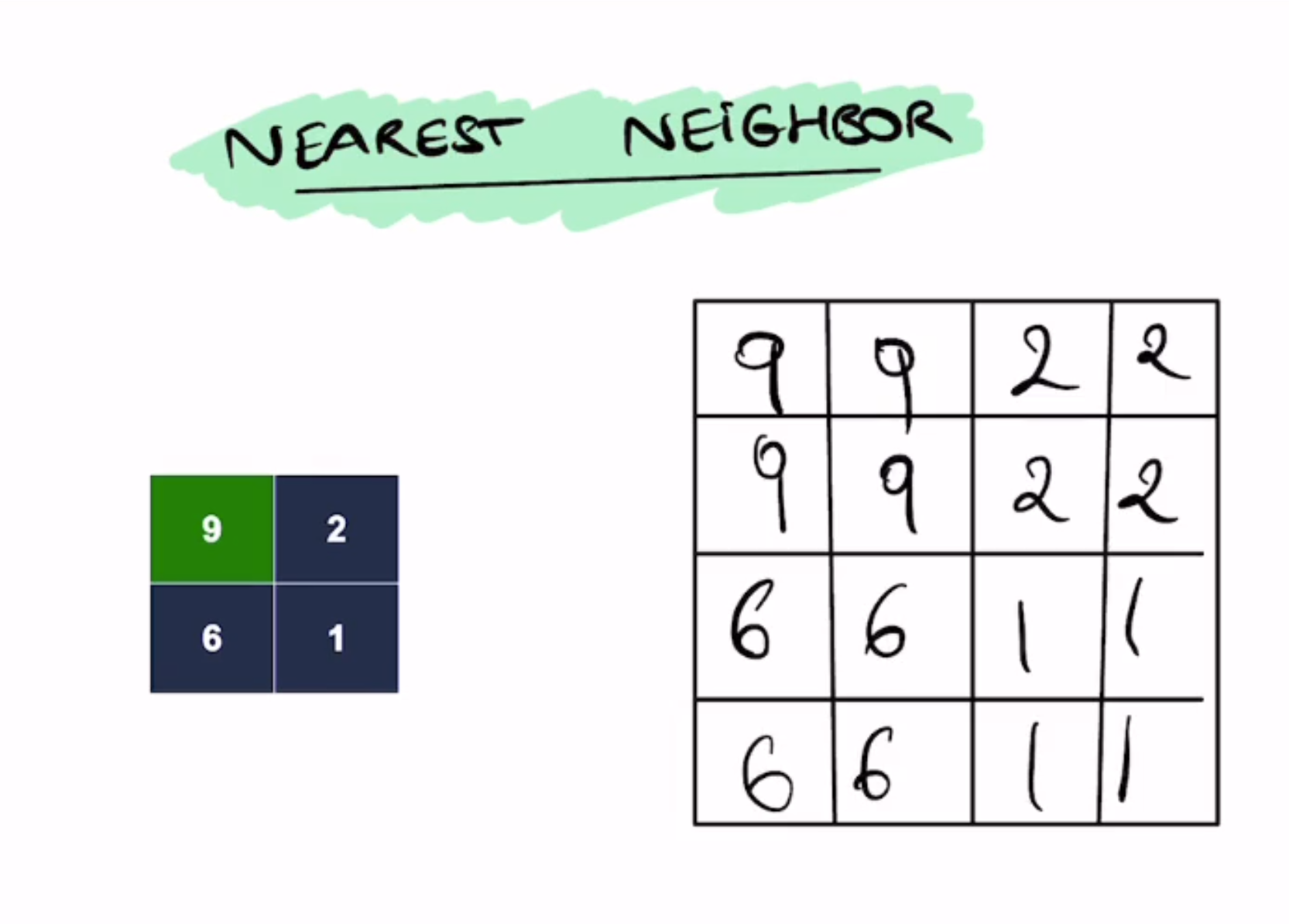

- Nearest Neighbor

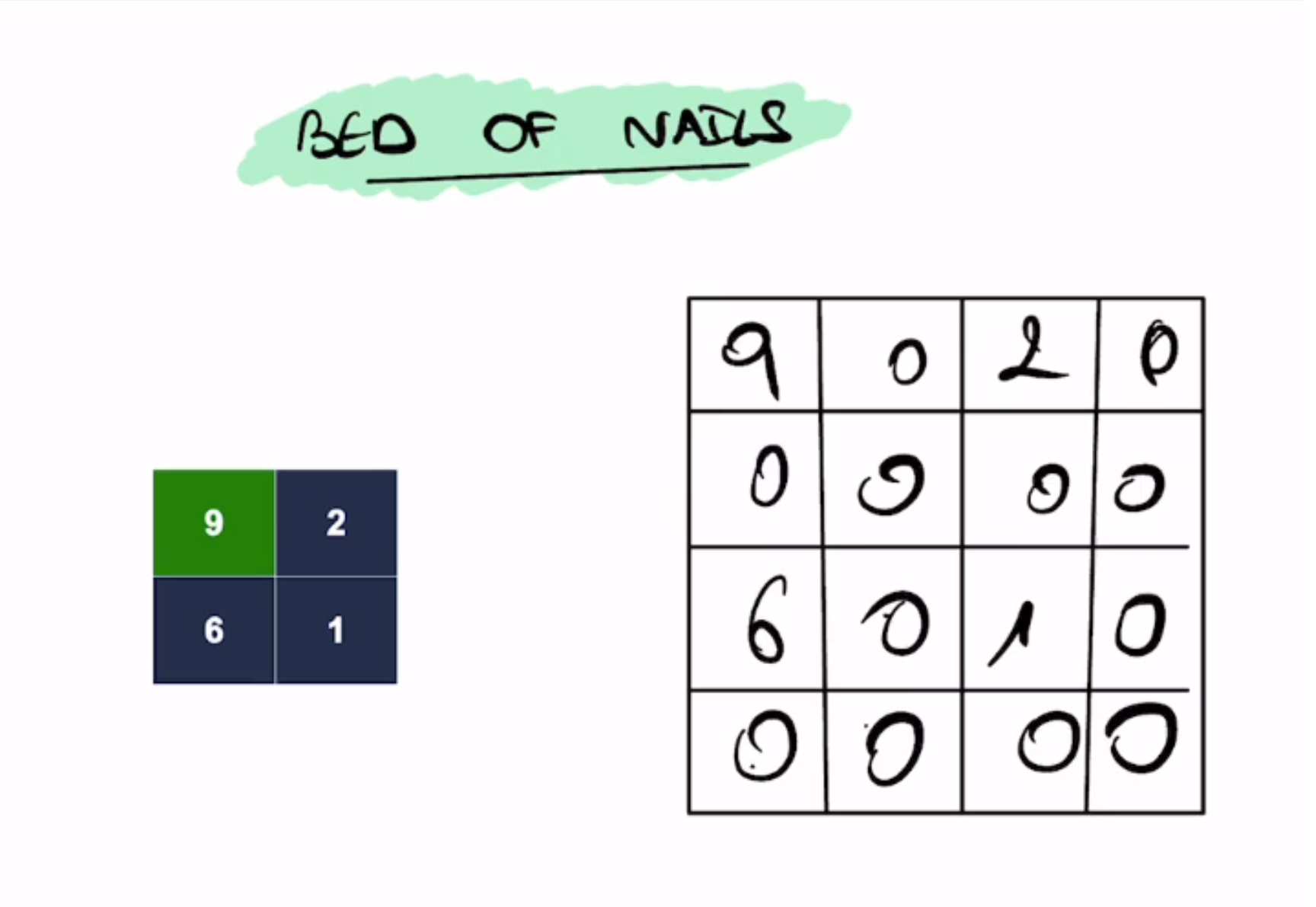

- Bed of Nails

- Bilinear upsampling

- Transposed convolutions

- Max unpooling

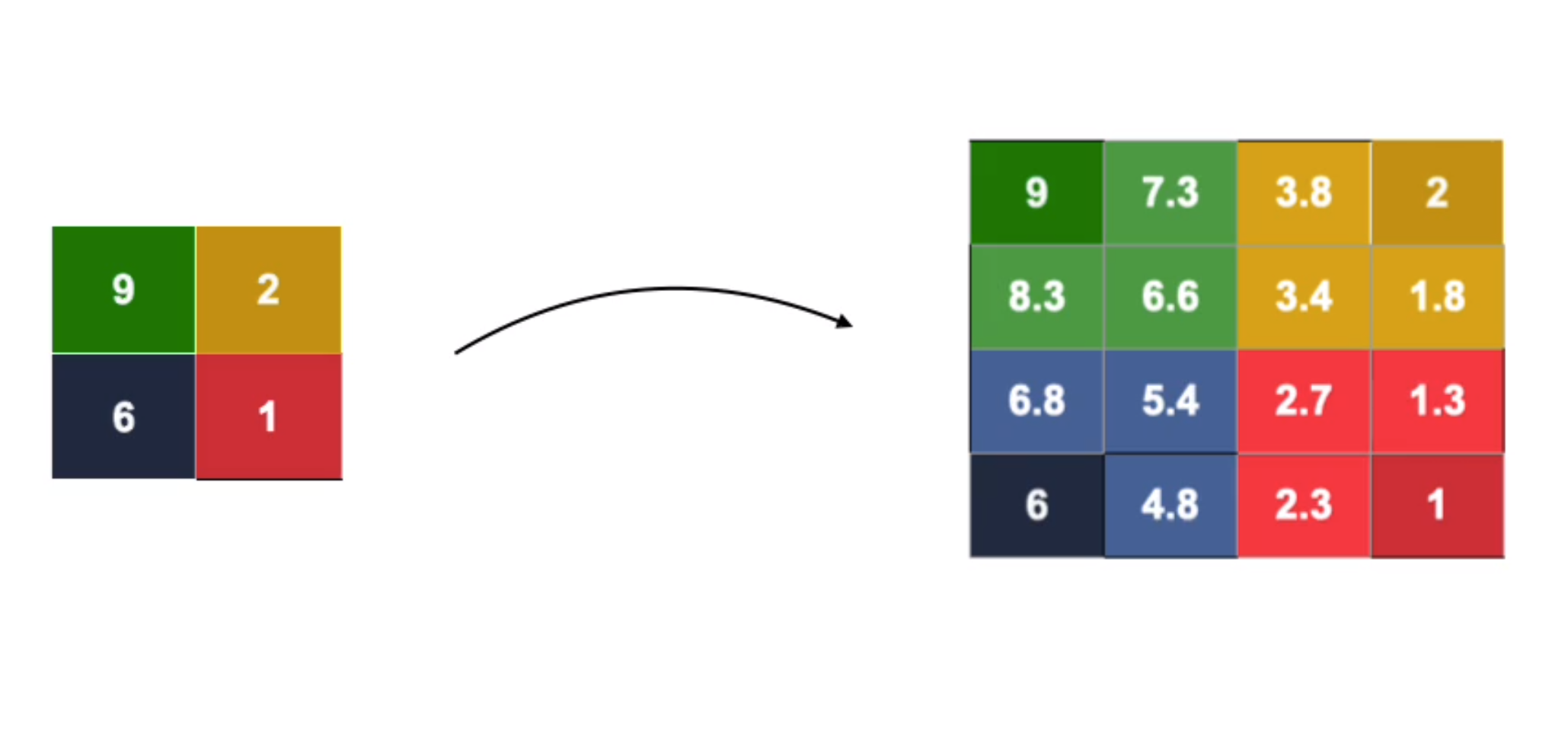

Nearest Neighbor
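Nearest neighbor upsampling copies each input value into a k x k block of the output. A minimal sketch for 2x upsampling of a 2x2 tensor, cross-checked against PyTorch's `F.interpolate` with `mode="nearest"`:

```python
import torch
import torch.nn.functional as F

x = torch.tensor([[1., 2.],
                  [3., 4.]])

# Nearest neighbor: repeat every row, then every column, twice.
nn_up = x.repeat_interleave(2, dim=0).repeat_interleave(2, dim=1)
print(nn_up)
# tensor([[1., 1., 2., 2.],
#         [1., 1., 2., 2.],
#         [3., 3., 4., 4.],
#         [3., 3., 4., 4.]])

# Same result from the built-in, which expects an (N, C, H, W) tensor.
builtin = F.interpolate(x[None, None], scale_factor=2, mode="nearest")[0, 0]
assert torch.equal(nn_up, builtin)
```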

Bed of nails
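Bed of nails upsampling places each input value at one fixed position of its output block and fills the rest with zeros. The sketch below assumes the top-left position (the usual illustration); `bed_of_nails` is a hypothetical helper, not a PyTorch function.

```python
import torch

def bed_of_nails(x: torch.Tensor, factor: int = 2) -> torch.Tensor:
    """Put each value at the top-left of a factor x factor block; zeros elsewhere."""
    h, w = x.shape[-2:]
    out = x.new_zeros((*x.shape[:-2], h * factor, w * factor))
    out[..., ::factor, ::factor] = x  # every factor-th row/column gets a "nail"
    return out

x = torch.tensor([[1., 2.],
                  [3., 4.]])
print(bed_of_nails(x))
# tensor([[1., 0., 2., 0.],
#         [0., 0., 0., 0.],
#         [3., 0., 4., 0.],
#         [0., 0., 0., 0.]])
```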

Bilinear interpolation

- Most popular
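Bilinear interpolation computes each new pixel as a distance-weighted average of the four nearest input pixels (linear interpolation along rows, then along columns). A small worked example with `F.interpolate`; `align_corners=True` is chosen here only because it makes the numbers easy to check by hand.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([[[[0., 1.],
                    [2., 3.]]]])  # shape (N=1, C=1, H=2, W=2)

# With align_corners=True the corner values stay fixed, so each new
# in-between pixel is the average of its neighbors.
out = F.interpolate(x, size=(3, 3), mode="bilinear", align_corners=True)
print(out[0, 0])
# tensor([[0.0000, 0.5000, 1.0000],
#         [1.0000, 1.5000, 2.0000],
#         [2.0000, 2.5000, 3.0000]])
```

The center value 1.5 is the mean of all four inputs, since it is equally far from each of them.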

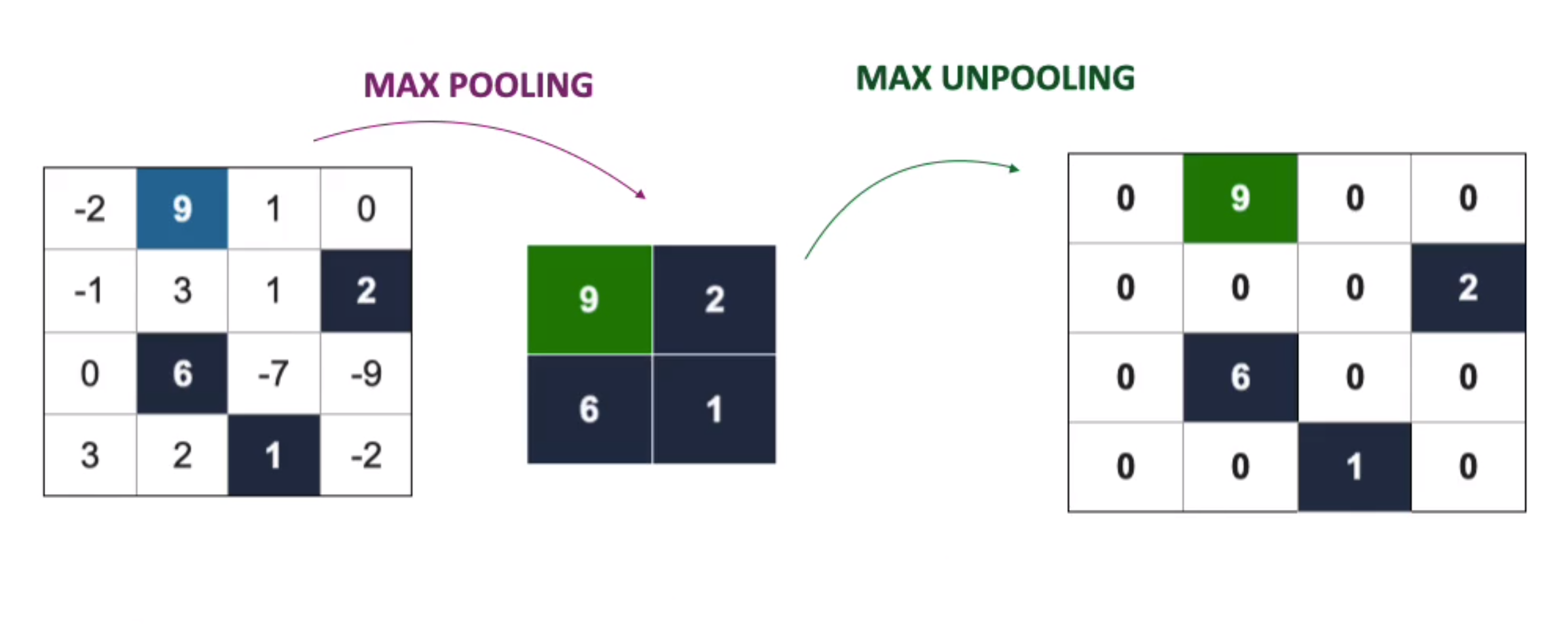

Max unpooling

- In max unpooling, we remember the position of the maximum value in each window during max pooling. During upsampling, each value is placed back at exactly that position, and the other positions are filled with zeros
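A sketch of max pooling followed by max unpooling with PyTorch's `F.max_pool2d(..., return_indices=True)` and `F.max_unpool2d`. The 4x4 input values are made up; in a real network the decoder would process the pooled features before unpooling, but here they are unpooled directly to show where the values land.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([[[[1., 5., 2., 0.],
                    [3., 4., 8., 1.],
                    [0., 2., 1., 1.],
                    [7., 1., 3., 6.]]]])

# Max pooling that also returns where each max came from.
pooled, indices = F.max_pool2d(x, kernel_size=2, stride=2, return_indices=True)
print(pooled[0, 0])
# tensor([[5., 8.],
#         [7., 6.]])

# Max unpooling: each value goes back to its remembered position, zeros elsewhere.
unpooled = F.max_unpool2d(pooled, indices, kernel_size=2, stride=2)
print(unpooled[0, 0])
# tensor([[0., 5., 0., 0.],
#         [0., 0., 8., 0.],
#         [0., 0., 0., 0.],
#         [7., 0., 0., 6.]])
```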

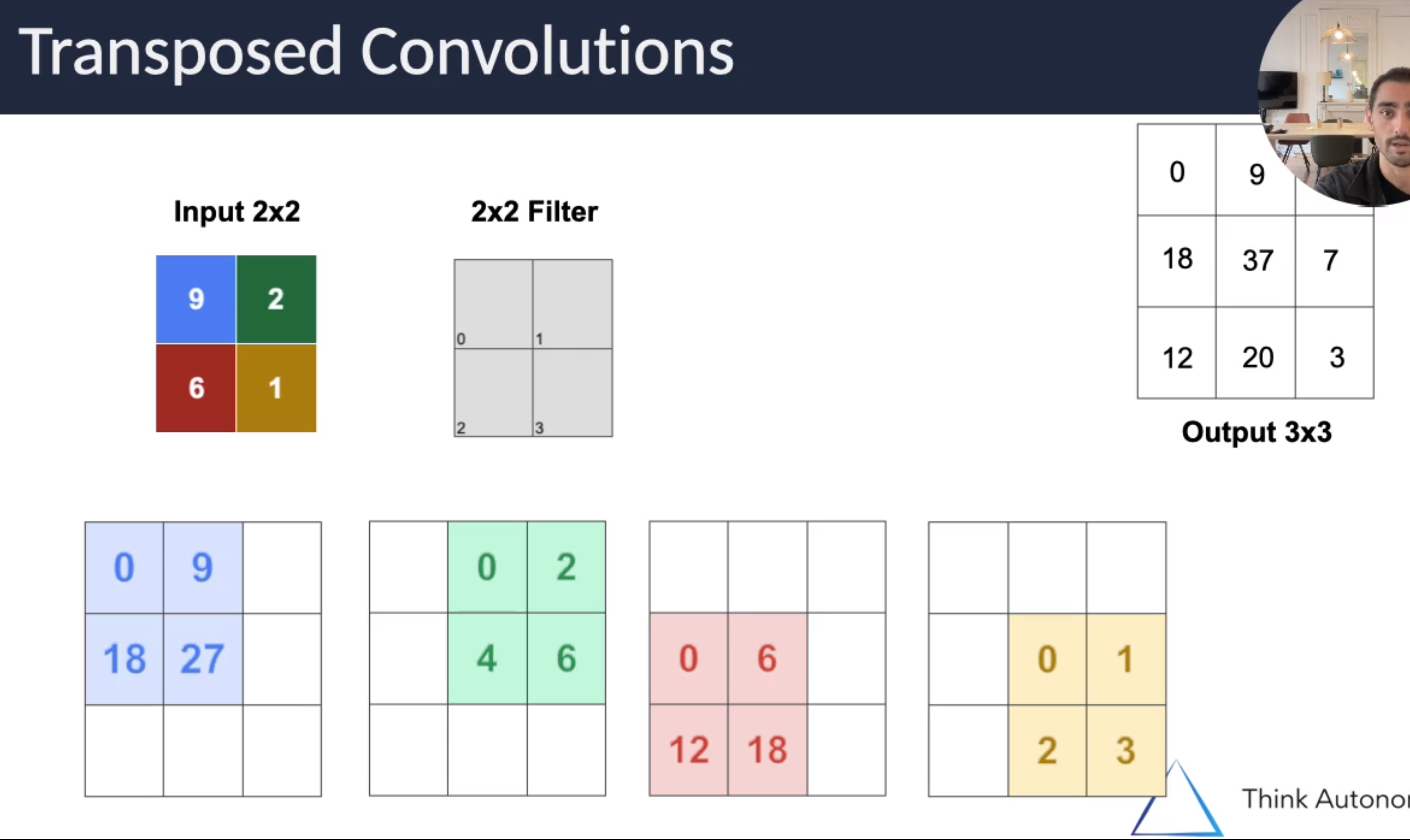

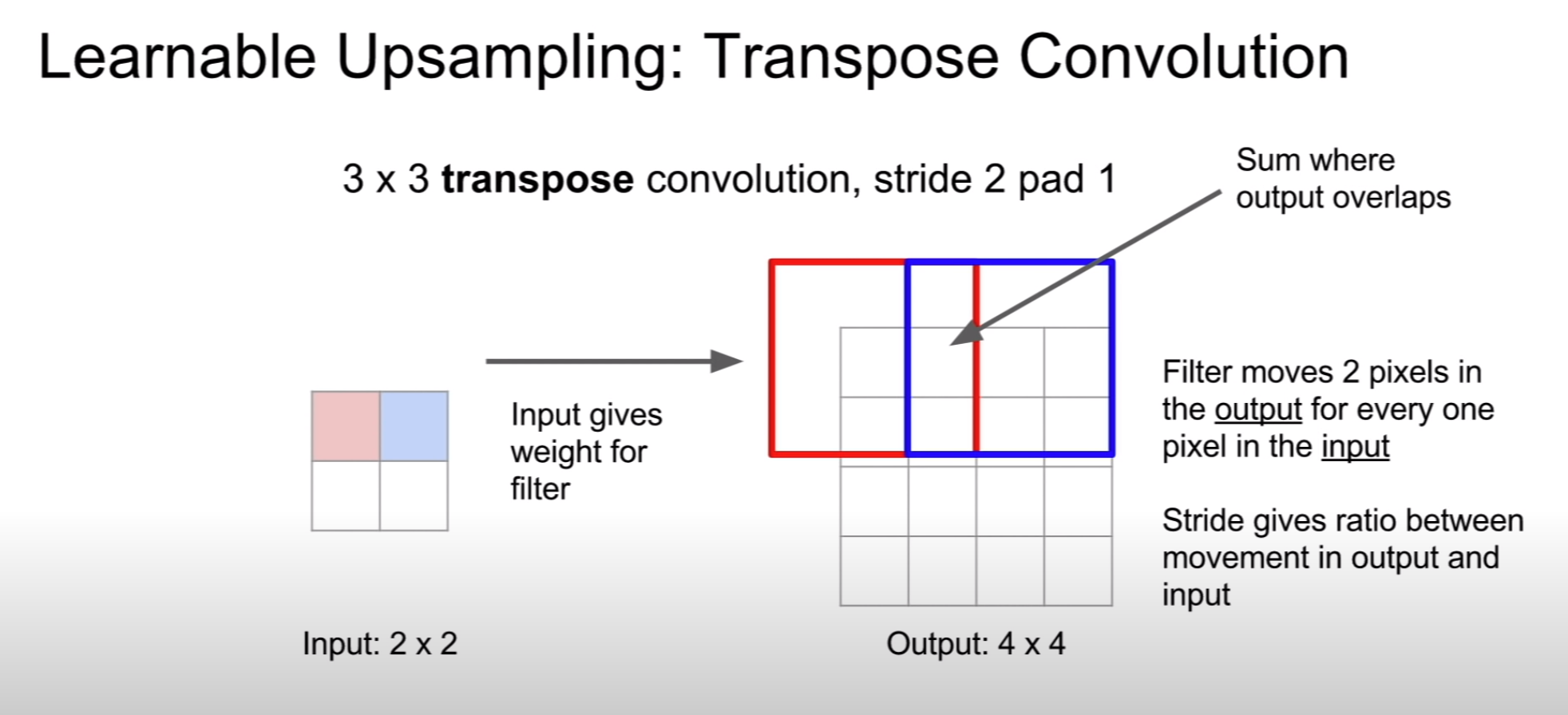

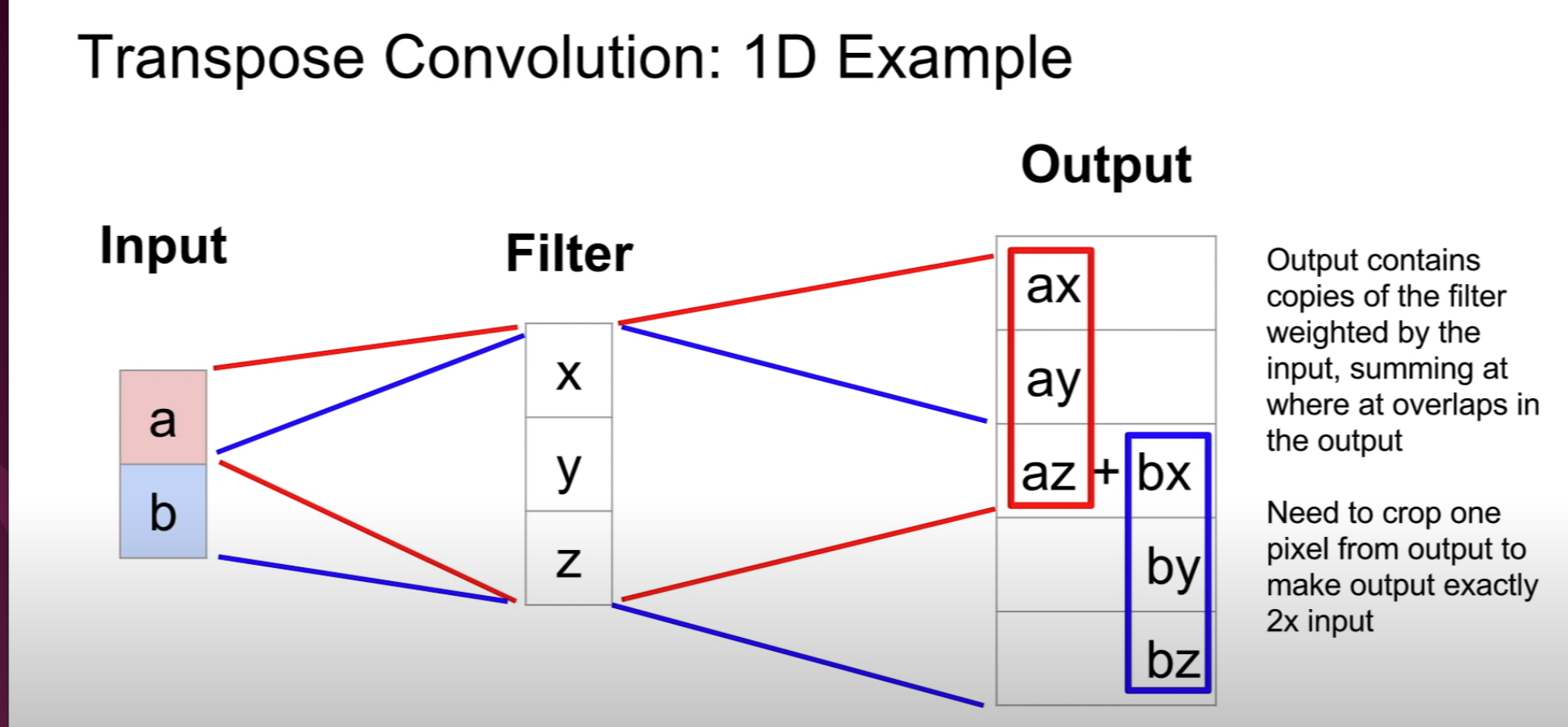

Transposed convolution

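This is a learnable upsampling method: the filter weights are learned during training

For upsampling, each input value acts as a weight for the filter: the filter is scaled by the input value, the scaled copies are placed in the output (shifted by the stride), and they are summed where they overlap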
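The scatter-and-sum description above can be sketched directly for a single channel with no padding. `transposed_conv2d` is a hypothetical helper written for illustration; its result is cross-checked against PyTorch's `F.conv_transpose2d`.

```python
import torch
import torch.nn.functional as F

def transposed_conv2d(x: torch.Tensor, k: torch.Tensor, stride: int = 2) -> torch.Tensor:
    """Single-channel transposed convolution, no padding: each input value
    scales a copy of the kernel, and overlapping copies are summed."""
    h, w = x.shape
    kh, kw = k.shape
    out = torch.zeros((h - 1) * stride + kh, (w - 1) * stride + kw)
    for i in range(h):
        for j in range(w):
            out[i * stride:i * stride + kh, j * stride:j * stride + kw] += x[i, j] * k
    return out

x = torch.tensor([[1., 2.],
                  [3., 4.]])
k = torch.arange(9.).reshape(3, 3)  # arbitrary non-symmetric kernel
out = transposed_conv2d(x, k, stride=2)
print(out.shape)  # torch.Size([5, 5])

# Cross-check (PyTorch weight shape: in_channels, out_channels, kH, kW).
ref = F.conv_transpose2d(x[None, None], k[None, None], stride=2)[0, 0]
assert torch.allclose(out, ref)
```

With a 3x3 kernel and stride 2 the copies overlap along the middle row and column, which is why transposed convolutions can produce checkerboard-like patterns.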

Transposed convolutions

Learnable Transposed convolution

Example of Transpose convolution
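In a network, a learnable transposed convolution is usually a layer such as `nn.ConvTranspose2d`. A minimal sketch, assuming a decoder feature map of 64 channels at 16x16; `kernel_size=2, stride=2` is one common choice for exact 2x upsampling.

```python
import torch
import torch.nn as nn

# Learnable 2x upsampling: kernel_size=2 with stride=2 doubles H and W.
up = nn.ConvTranspose2d(in_channels=64, out_channels=32, kernel_size=2, stride=2)

x = torch.randn(1, 64, 16, 16)  # e.g. a decoder feature map
y = up(x)
print(y.shape)          # torch.Size([1, 32, 32, 32])
print(up.weight.shape)  # torch.Size([64, 32, 2, 2]), learned by backprop
```

The output size follows `(H_in - 1) * stride - 2 * padding + kernel_size` (with default dilation and output padding), so `kernel_size=4, stride=2, padding=1` also doubles the size.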

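Interpolation in PyTorch

```python
import torch
import torch.nn.functional as F

x = torch.rand(1, 3, 4, 4)  # input must be (N, C, H, W) for mode='bilinear'
nearest_neighbour_out = F.interpolate(x, scale_factor=2, mode='nearest')
bilinear_interp_out = F.interpolate(x, scale_factor=2, mode='bilinear', align_corners=False)
```

Skip Connections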

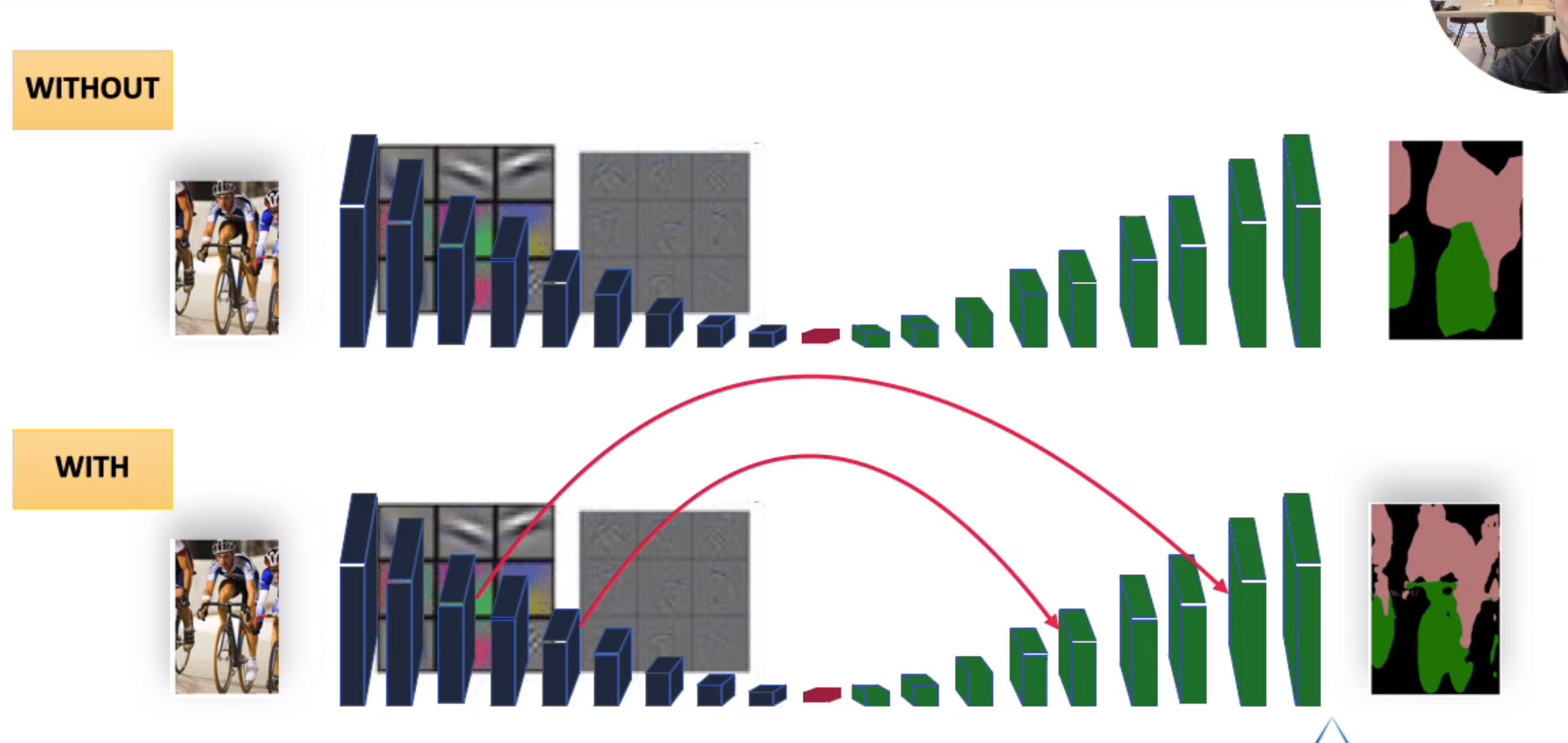

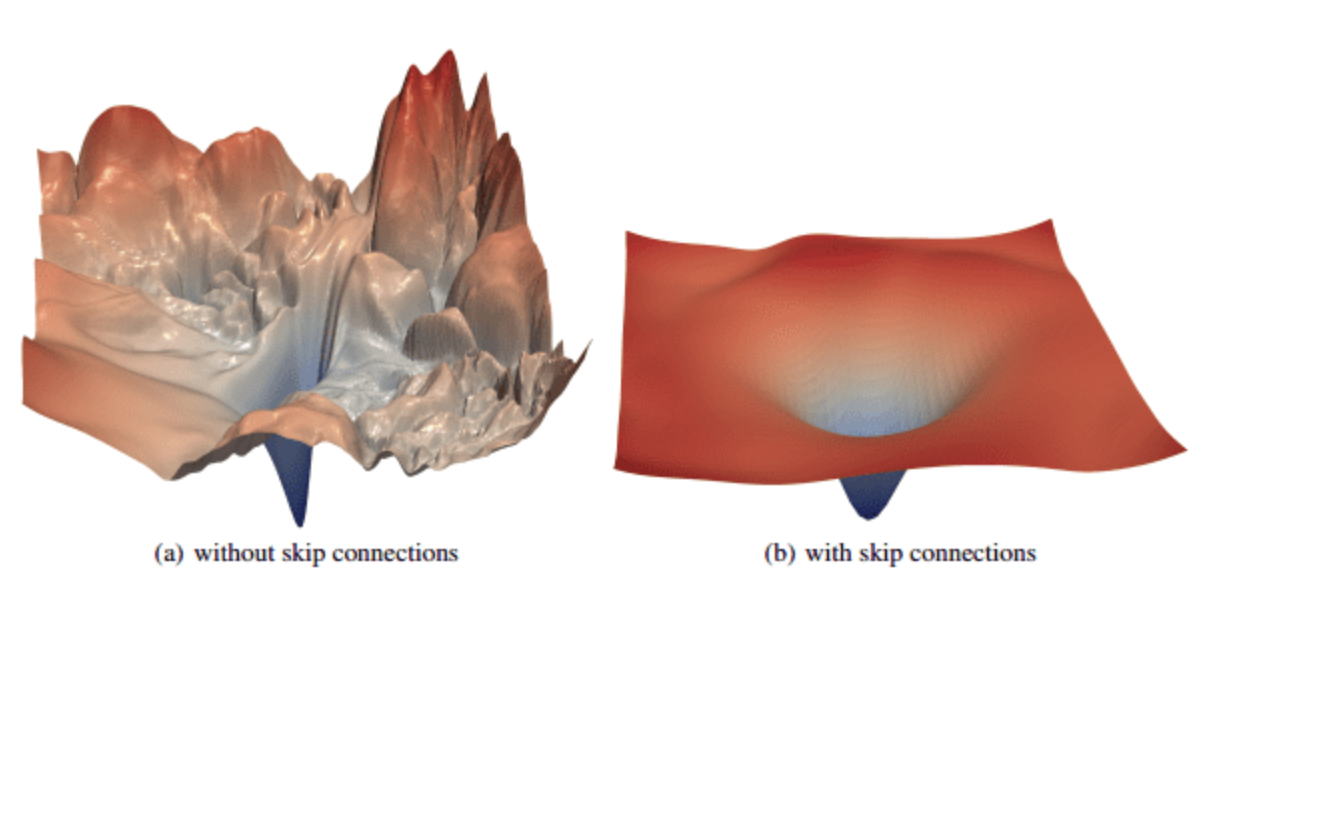

- When decoding (decompressing) the image, it is difficult for the network to recover the original image size and detail, because a lot of information is lost during compression. To help with this, skip connections link the encoder and the decoder.

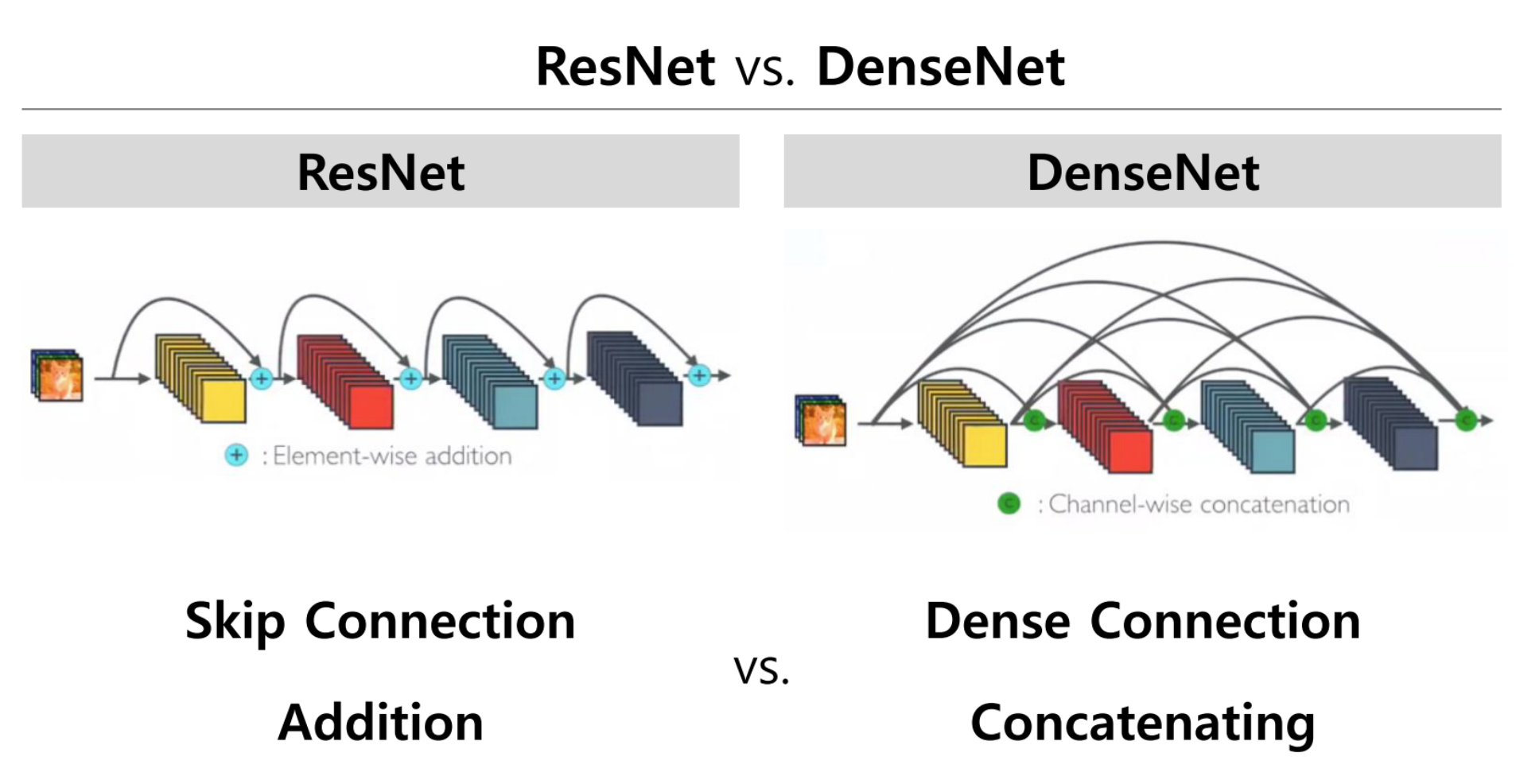

There are two types of skip connections: additive and concatenating

ResNet uses addition (feature maps are summed element-wise)

DenseNet uses concatenation (feature maps are stacked along the channel dimension)
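The two kinds of skip connection differ only in how the two feature maps are combined, which shows up in the output shape. A minimal sketch with made-up tensor sizes:

```python
import torch

# Two feature maps with the same spatial size (N, C, H, W).
a = torch.randn(1, 64, 32, 32)  # e.g. a block's input
b = torch.randn(1, 64, 32, 32)  # e.g. the block's output

added = a + b                            # ResNet-style: shapes must match exactly
concatenated = torch.cat([a, b], dim=1)  # DenseNet-style: stack along channels

print(added.shape)         # torch.Size([1, 64, 32, 32])
print(concatenated.shape)  # torch.Size([1, 128, 32, 32])
```

Addition keeps the channel count fixed; concatenation grows it, so the next layer must accept the larger number of channels.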

In segmentation, skip connections are used to pass features from the encoder path to the decoder path in order to recover spatial information lost during downsampling
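A toy sketch of an encoder-decoder with one concatenating skip connection in the style of U-Net: full-resolution encoder features are concatenated with the upsampled decoder features before the final per-pixel classifier. The class name `TinySegNet`, the channel counts and `num_classes=2` are illustration choices, not a specific published architecture.

```python
import torch
import torch.nn as nn

class TinySegNet(nn.Module):
    """One-level encoder-decoder with a concatenating skip connection."""

    def __init__(self, in_ch: int = 3, num_classes: int = 2):
        super().__init__()
        self.enc = nn.Sequential(nn.Conv2d(in_ch, 16, 3, padding=1), nn.ReLU())
        self.down = nn.MaxPool2d(2)
        self.bottleneck = nn.Sequential(nn.Conv2d(16, 32, 3, padding=1), nn.ReLU())
        self.up = nn.ConvTranspose2d(32, 16, kernel_size=2, stride=2)
        # 16 upsampled channels + 16 skipped encoder channels = 32 inputs
        self.dec = nn.Sequential(nn.Conv2d(32, 16, 3, padding=1), nn.ReLU())
        self.head = nn.Conv2d(16, num_classes, kernel_size=1)  # per-pixel class scores

    def forward(self, x):
        skip = self.enc(x)                    # full-resolution encoder features
        z = self.bottleneck(self.down(skip))  # low-resolution features
        u = self.up(z)                        # back to full resolution
        u = torch.cat([u, skip], dim=1)       # skip connection: reuse encoder detail
        return self.head(self.dec(u))

net = TinySegNet()
logits = net(torch.randn(1, 3, 64, 64))
print(logits.shape)  # torch.Size([1, 2, 64, 64])
```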

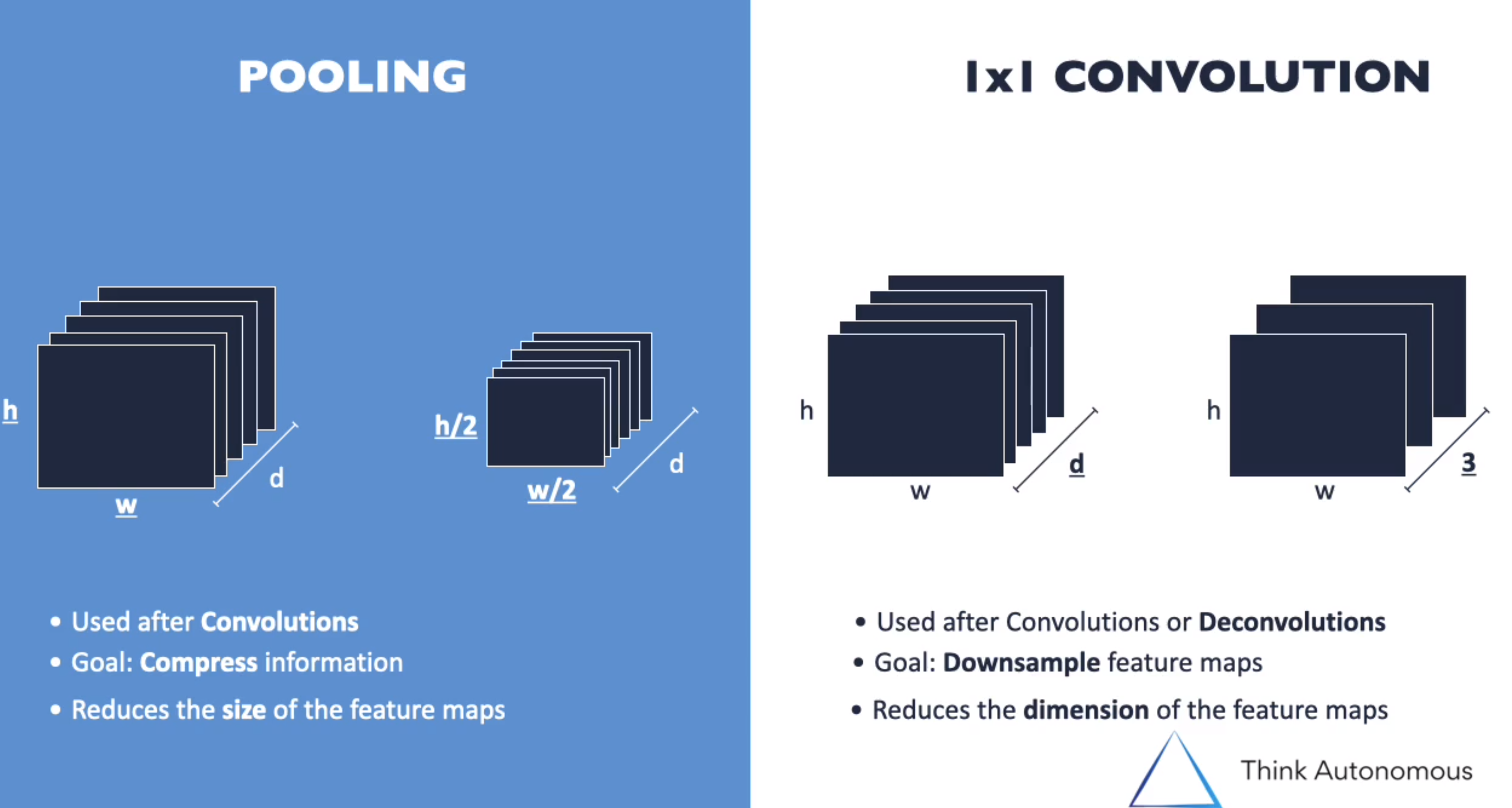

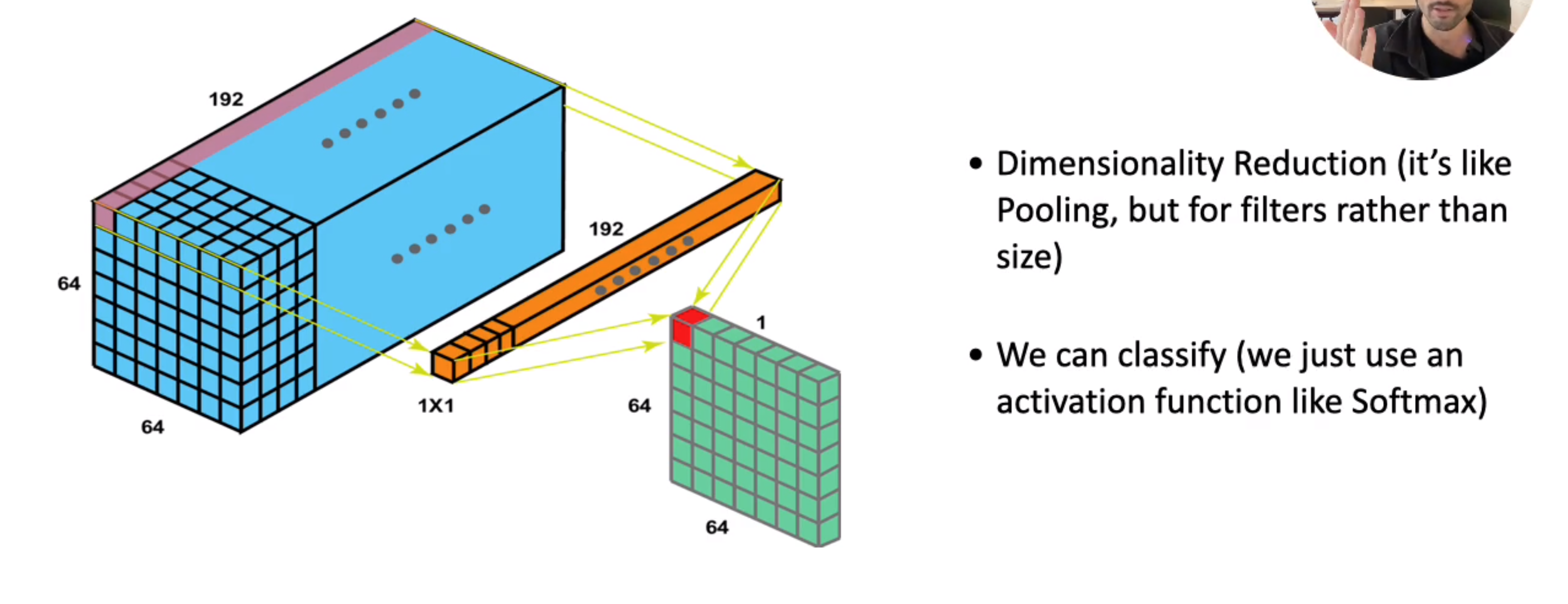

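* 1x1 convolution is used to reduce the number of channels (feature maps). A 1x1 filter spans all input channels at a single pixel, so each filter outputs a learned weighted sum of the channels; using fewer filters than input channels gives fewer output channels while the spatial size stays the same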

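A minimal sketch of channel reduction with a 1x1 convolution (256 to 64 channels; the sizes are arbitrary), plus a check that it equals a per-pixel weighted sum over channels computed with `torch.einsum`:

```python
import torch
import torch.nn as nn

x = torch.randn(1, 256, 14, 14)  # 256 feature maps

# 1x1 convolution with 64 filters: 256 -> 64 channels, spatial size unchanged.
reduce = nn.Conv2d(256, 64, kernel_size=1)
y = reduce(x)
print(y.shape)  # torch.Size([1, 64, 14, 14])

# Equivalent view: at every pixel, each output channel is a weighted sum of
# the 256 input channels plus a bias, with the same weights at every pixel.
w = reduce.weight.squeeze(-1).squeeze(-1)  # shape (64, 256)
manual = torch.einsum("oc,nchw->nohw", w, x) + reduce.bias.view(1, -1, 1, 1)
assert torch.allclose(y, manual, atol=1e-4)
```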

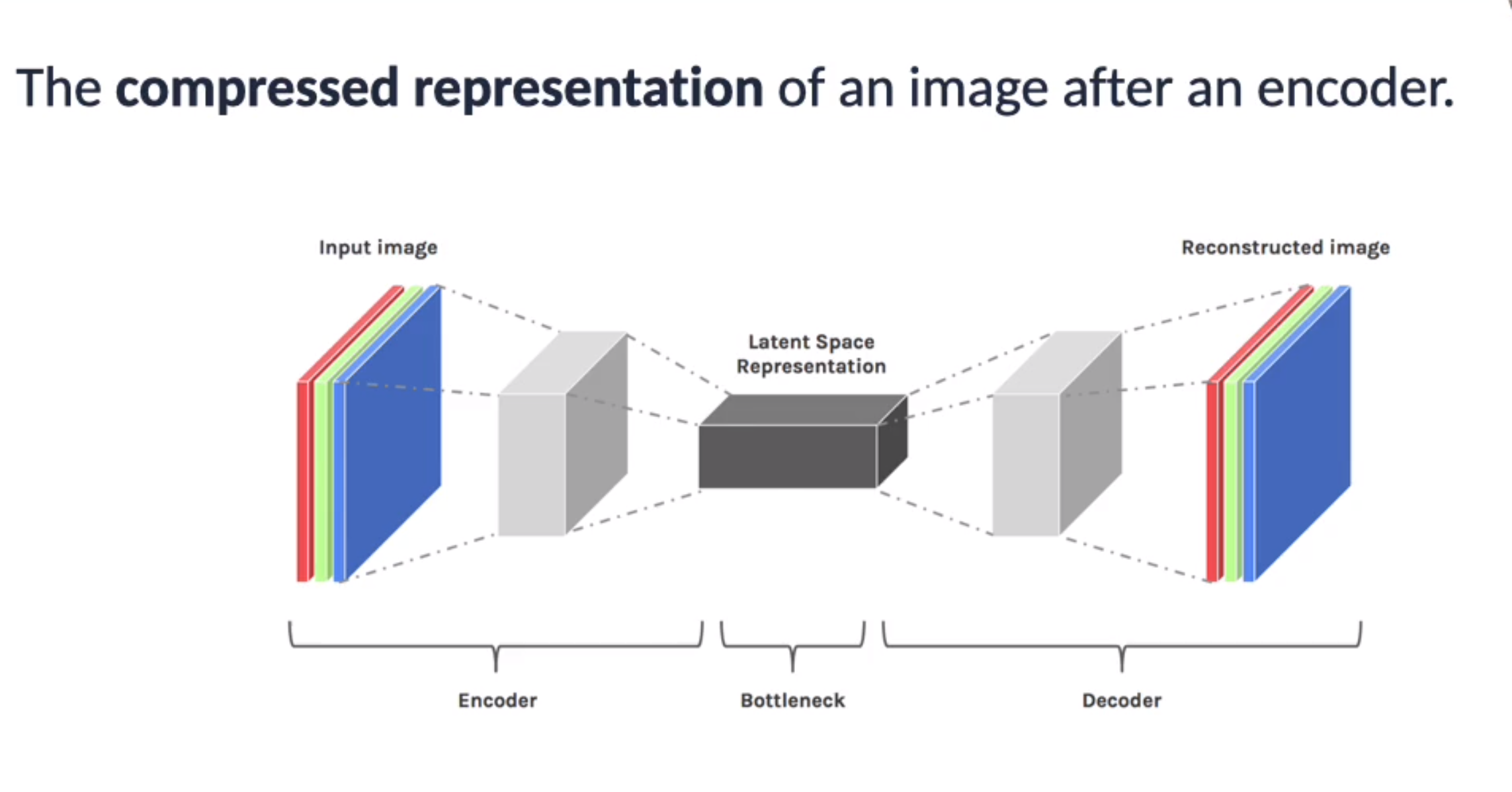

Metrics and loss functions

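- The metric used is Intersection over Union (IoU, i.e. the Jaccard index)

- The loss function used is focal loss: cross-entropy weighted per class (α) and multiplied by a modulating factor (1 − p_t)^γ. The γ term down-weights easy, well-classified pixels so training concentrates on hard ones, which helps with class imbalance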

- Dice loss is also used
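A sketch of the metric and the two losses for the binary (foreground vs background) case. The function names are hypothetical helpers; `alpha=0.25, gamma=2.0` are commonly used focal loss defaults, assumed here rather than taken from these notes, and `eps` only guards against division by zero.

```python
import torch
import torch.nn.functional as F

def iou(pred_mask: torch.Tensor, true_mask: torch.Tensor, eps: float = 1e-7) -> torch.Tensor:
    """Intersection over Union (Jaccard index) for binary masks."""
    pred_mask, true_mask = pred_mask.bool(), true_mask.bool()
    inter = (pred_mask & true_mask).sum().float()
    union = (pred_mask | true_mask).sum().float()
    return (inter + eps) / (union + eps)

def soft_dice_loss(probs: torch.Tensor, target: torch.Tensor, eps: float = 1e-7) -> torch.Tensor:
    """1 - Dice coefficient on predicted probabilities (differentiable)."""
    inter = (probs * target).sum()
    return 1 - (2 * inter + eps) / (probs.sum() + target.sum() + eps)

def binary_focal_loss(logits: torch.Tensor, target: torch.Tensor,
                      alpha: float = 0.25, gamma: float = 2.0) -> torch.Tensor:
    """Focal loss -alpha_t * (1 - p_t)**gamma * log(p_t), averaged over pixels."""
    ce = F.binary_cross_entropy_with_logits(logits, target, reduction="none")  # -log(p_t)
    p = torch.sigmoid(logits)
    p_t = p * target + (1 - p) * (1 - target)              # probability of the true class
    alpha_t = alpha * target + (1 - alpha) * (1 - target)  # per-class weight
    return (alpha_t * (1 - p_t) ** gamma * ce).mean()

pred = torch.tensor([[1, 1, 0],
                     [0, 1, 0]])
gt = torch.tensor([[1, 0, 0],
                   [0, 1, 1]])
print(iou(pred, gt))  # intersection 2, union 4, so IoU is about 0.5
```

With `gamma=0` the modulating factor is 1 and focal loss reduces to α-weighted cross-entropy, which is a quick sanity check of the implementation.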