LightGBM

Developed and released by Microsoft. It Supports parallel and GPU learning.Below are some methods utilized by LighGBM to handle large data sets.

Using Histogram for optimal splits

- It is a histogram based gradient boosting approach. This will work reasonably well for medium-sized data sets. Histogram bin construction itself can be slow for very large number of data points or a large number of features.

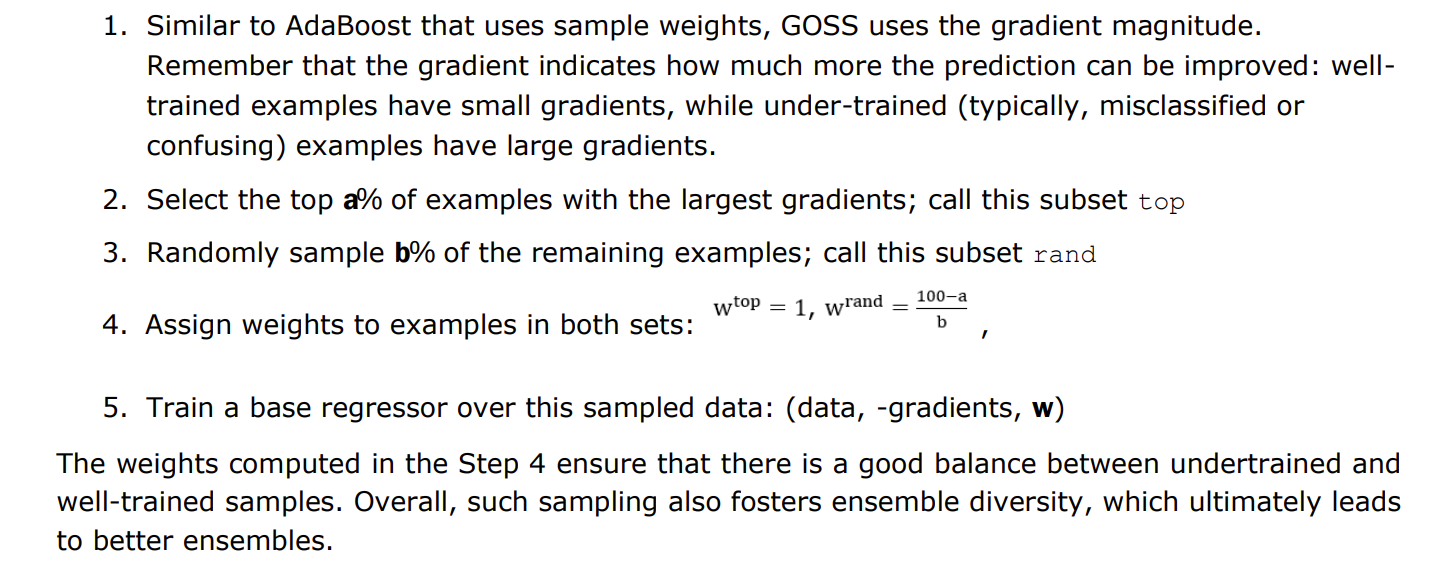

Gradient-Based One-Side Sampling (GOSS)

Data is downsampled smartly using a procedure called Gradient-Based One Side Sampling. Sampling should consists of a healthy balance between examples which are misclassified and examples which are classified correctly

LightGBM GOSS Sampling Method

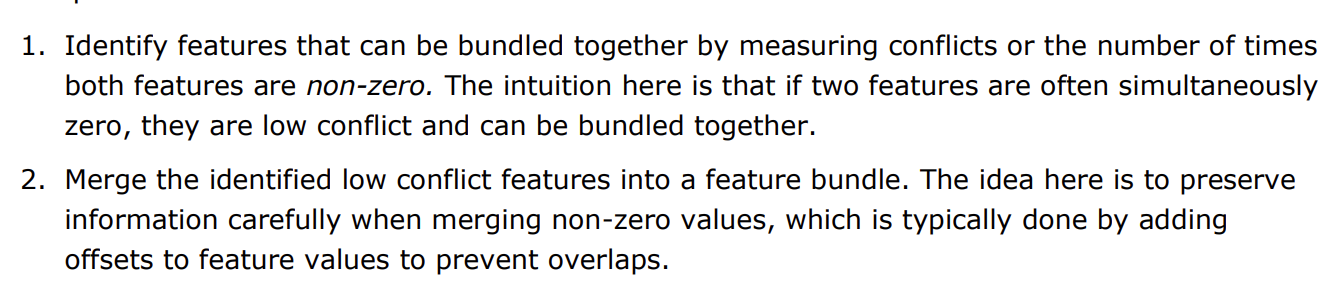

Exclusive Feature Bundling (EFB)

- Downsampling the features. This will provide improvements in training speed if the feature space is sparse and features are mutually exclusive

- EFB exploits sparsity and aims to merge mutually exclusive columns into one column to reduce the number of effective features.

Dropout meets Multiple Additive Regression Trees (DART)

- Similar to Dropout from Deep learning. DART randomly and temporarily drops base estimators from the overall ensemble during gradient fitting iterations to mitigate overfitting.

Training Modes in LightGBM

LightGBM in Practice

learning_rate,shrinkage_rateandetaare different names for learning rate. Only one should be set.- Use early_stopping to stop the training if the validation score metric does not improve over the last

early_stopping_rounds. We can specify the score metric to be used.

Read

- Dropout meets Multiple Additive Regression Trees (DART)

- DART is slow - why this is the case

- Evaluating Machine Learning Models by Alice Zheng

- How GPUs are used to train GBT models - If they are sequential