```python
import torch
import torch.nn as nn
import torch.nn.functional as F
```

Activation Functions

Creating our own activation functions in PyTorch

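```python
class ActivationFunction(nn.Module):
    """Base class: records the activation's name and a config dict of its hyperparameters."""

    def __init__(self):
        super().__init__()
        self.name = self.__class__.__name__
        self.config = {"name": self.name}
```

Sigmoid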

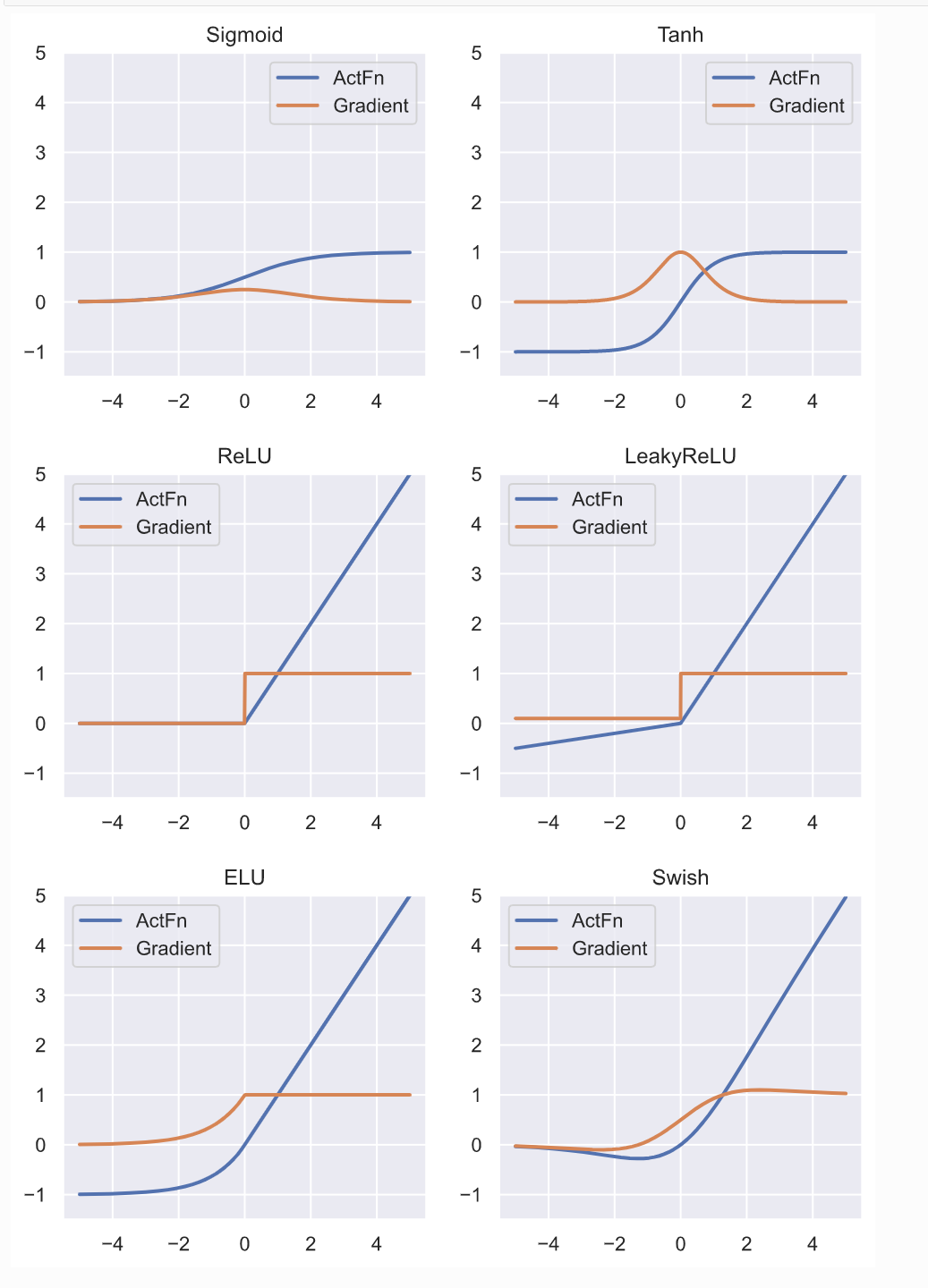

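The sigmoid activation function does not work well in the inner layers of a deep network. Its derivative is never larger than 0.25, so gradients shrink as they are propagated backward. As a result, the gradients of the inner layers (those closer to the input) become very small and those layers are very difficult to update. Because the gradients of the outer layers are large compared to those of the inner layers, one learning rate does not fit all layers: a high learning rate would suit the inner layers, while a low learning rate would suit the outer layers. Also, sigmoid outputs lie between 0 and 1 and are therefore not centered around zero, which is not good for a neural network.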
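The following is a minimal sketch that uses PyTorch autograd to check the vanishing-gradient claim. It uses the built-in `torch.sigmoid` rather than the class below. The 10-layer depth is an arbitrary illustrative choice. The bound it computes ignores the weight matrices, which can also scale gradients up or down during backpropagation.

```python
import torch

# Evaluate sigmoid on a few inputs and let autograd compute d(sigmoid)/dx.
x = torch.tensor([-6.0, -2.0, 0.0, 2.0, 6.0], requires_grad=True)
y = torch.sigmoid(x)
y.sum().backward()
sigmoid_grads = x.grad  # equals sigmoid(x) * (1 - sigmoid(x))

# The derivative peaks at x = 0 with value 0.25 and decays quickly away from it.
peak_grad = sigmoid_grads[2].item()

# Backpropagating through n sigmoid layers multiplies n such local derivatives
# (ignoring the weights), so even in the best case the factor is 0.25 ** n.
n_layers = 10  # illustrative depth
best_case_factor = 0.25 ** n_layers
print(peak_grad, best_case_factor)
```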

```python
class Sigmoid(ActivationFunction):
    def forward(self, x):
        # sigmoid(x) = 1 / (1 + e^(-x)), squashes inputs into (0, 1)
        return 1 / (1 + torch.exp(-x))
```

Tanh

Tanh outputs range from -1 to 1 and are centered around zero, which is good for the network.
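This is a small sketch of the zero-centering difference. It feeds the same symmetric inputs through the built-ins `torch.sigmoid` and `torch.tanh` and compares the average outputs. The input range of -3 to 3 is an arbitrary choice.

```python
import torch

# Inputs symmetric around zero: -3.0, -2.9, ..., 3.0
x = torch.linspace(-3.0, 3.0, steps=61)

sigmoid_mean = torch.sigmoid(x).mean().item()  # sigmoid outputs lie in (0, 1)
tanh_mean = torch.tanh(x).mean().item()        # tanh outputs lie in (-1, 1)

print(f"mean sigmoid output: {sigmoid_mean:.3f}")  # close to 0.5
print(f"mean tanh output:    {tanh_mean:.3f}")     # close to 0.0
```

Every sigmoid output is positive, so the next layer always receives same-signed inputs. Tanh outputs balance out around zero.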

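```python
class Tanh(ActivationFunction):
    def forward(self, x):
        # tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x)), squashes inputs into (-1, 1).
        # Note: in float32, torch.exp overflows to inf for |x| above about 88,
        # so this formula returns nan (inf / inf) there; torch.tanh avoids this.
        x_exp, neg_x_exp = torch.exp(x), torch.exp(-x)
        return (x_exp - neg_x_exp) / (x_exp + neg_x_exp)
```

ReLU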

This activation function replaces negative values with zero. Its gradient is either 0 or 1, so it looks like a step function. Neurons whose activation is zero do not propagate gradients and therefore do not get updated. If this happens for every input, they are considered dead neurons. There are two ways to deal with this:

- Use a lower learning rate. Large updates can push a neuron's weights into a region where it never activates again.
- Initialize the bias to a small positive value. This makes the pre-activation less likely to be negative, so the output is less likely to be zero.

In practice, ReLU is the most commonly used activation function.
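Here is a minimal sketch of both points, using the built-in `torch.relu`. The first part shows the 0/1 gradient. The second part builds a single hypothetical neuron whose bias of -10 (an illustrative value, not from any real model) keeps its pre-activation negative for every input. Its output is always zero, and neither its weight nor its bias receives any gradient.

```python
import torch

# ReLU's local gradient is 1 for positive inputs and 0 for negative inputs.
x = torch.tensor([-2.0, -0.5, 0.5, 2.0], requires_grad=True)
torch.relu(x).sum().backward()
relu_grads = x.grad.tolist()

# A "dead" neuron: with this hypothetical large negative bias, w * input + b
# is negative for every input below, so the ReLU output is always zero.
w = torch.tensor(1.0, requires_grad=True)
b = torch.tensor(-10.0, requires_grad=True)
inputs = torch.linspace(-3.0, 3.0, steps=100)  # pre-activations lie in [-13, -7]
out = torch.relu(w * inputs + b)
out.sum().backward()

# No gradient reaches w or b, so gradient descent cannot revive this neuron.
print(relu_grads, w.grad.item(), b.grad.item())
```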

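```python
class ReLU(ActivationFunction):
    def forward(self, x):
        # Multiply by a 0/1 mask so that negative entries become zero
        return x * (x > 0).float()
        # Equivalent alternatives:
        # return x.clamp(min=0)
        # return torch.maximum(x, torch.zeros_like(x))
        # (Python's built-in max(x, 0) does not work element-wise on tensors.)
```

Leaky ReLU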

Leaky ReLU multiplies negative inputs by a small slope, alpha, instead of setting them to zero. This helps overcome the dead-neuron problem. However, it adds a hyperparameter to tune. Instead of 0 and 1, the gradient is alpha (for negative inputs) and 1 (for positive inputs).
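This is a short sketch that checks the outputs and gradients using PyTorch's built-in `F.leaky_relu`. Its `negative_slope` argument plays the role of alpha and also defaults to 0.01, the same default as the class below. The input values are arbitrary.

```python
import torch
import torch.nn.functional as F

alpha = 0.01  # slope for negative inputs
x = torch.tensor([-2.0, -0.5, 0.5, 2.0], requires_grad=True)
y = F.leaky_relu(x, negative_slope=alpha)
y.sum().backward()

# Negative inputs are scaled by alpha; positive inputs pass through unchanged.
print(y.tolist())
# The gradient is alpha for negative inputs and 1 for positive inputs, never 0.
print(x.grad.tolist())
```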

```python
class LeakyReLU(ActivationFunction):
    def __init__(self, alpha=0.01):
        super().__init__()
        self.config["alpha"] = alpha  # slope applied to negative inputs

    def forward(self, x):
        # x for positive inputs, alpha * x otherwise
        return torch.where(x > 0, x, x * self.config["alpha"])
```

ELU

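For negative inputs, ELU returns e^x - 1, which approaches -1 exponentially. It does not cut negative values to zero. Positive inputs pass through unchanged.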
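This sketch evaluates the ELU formula by hand (with the scale alpha = 1, as in the class below). It then checks the result against PyTorch's built-in `F.elu`, whose `alpha` parameter also defaults to 1.0. The sample inputs are arbitrary.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([-10.0, -3.0, -1.0, 0.0, 1.0, 3.0])

# ELU with alpha = 1: x for x > 0, exp(x) - 1 otherwise.
manual = torch.where(x > 0, x, torch.exp(x) - 1)
builtin = F.elu(x, alpha=1.0)

# Negative inputs saturate toward -1 instead of being cut to zero:
# exp(-10) - 1 is about -0.99995 and exp(-1) - 1 is about -0.632.
print(manual.tolist())
print(torch.allclose(manual, builtin))
```

Unlike ReLU, the gradient for negative inputs is e^x rather than 0. It shrinks for very negative inputs but never becomes exactly zero.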

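```python
class ELU(ActivationFunction):
    def forward(self, x):
        # x for positive inputs, e^x - 1 otherwise (saturates toward -1).
        # Clamping before exp keeps the unused branch finite for large positive x;
        # otherwise exp overflows to inf and the backward pass of torch.where yields nan.
        return torch.where(x > 0, x, torch.exp(x.clamp(max=0)) - 1)
```

Swish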

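Swish was compared with many other activation functions on a lot of datasets and was found to perform very well. In networks with many layers, it tends to match or outperform ReLU. Unlike the functions above, Swish is not monotonic. As the input decreases from zero, the output first dips below zero (to a minimum of about -0.28) and then rises back toward zero.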
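This is a minimal numerical check of the non-monotonicity. It evaluates x * sigmoid(x) on a fine grid and locates the minimum. The grid range and resolution are arbitrary choices, so the location of the minimum is only accurate to the grid spacing.

```python
import torch

# Evaluate Swish, x * sigmoid(x), on a fine grid over [-5, 5].
x = torch.linspace(-5.0, 5.0, steps=10001)
y = x * torch.sigmoid(x)

# Swish dips below zero for negative inputs and then climbs back toward 0,
# so its minimum lies strictly inside the negative range.
min_idx = torch.argmin(y)
x_min, y_min = x[min_idx].item(), y[min_idx].item()
print(f"minimum of about {y_min:.3f} at x = {x_min:.2f}")

# A monotonic (non-decreasing) function never goes down as x increases;
# Swish does, to the left of its minimum.
is_monotonic = bool(torch.all(y[1:] >= y[:-1]))
print(is_monotonic)
```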

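```python
class Swish(ActivationFunction):
    def forward(self, x):
        # x * sigmoid(x); recent PyTorch versions also provide this as torch.nn.functional.silu
        return x * torch.sigmoid(x)
```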
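This is an optional sanity check for the hand-written formulas in this section. It restates each formula inline, so the snippet runs on its own, and compares each one with the corresponding PyTorch built-in. It assumes a PyTorch version that includes `F.silu`, which is PyTorch's name for Swish (with beta = 1). The input grid and the tolerance `atol=1e-6` are arbitrary choices.

```python
import torch
import torch.nn.functional as F

x = torch.linspace(-5.0, 5.0, steps=101)

# Hand-written formulas from this section, restated so this snippet is self-contained
manual = {
    "sigmoid": 1 / (1 + torch.exp(-x)),
    "tanh": (torch.exp(x) - torch.exp(-x)) / (torch.exp(x) + torch.exp(-x)),
    "relu": x * (x > 0).float(),
    "leaky_relu": torch.where(x > 0, x, 0.01 * x),
    "elu": torch.where(x > 0, x, torch.exp(x) - 1),
    "swish": x * torch.sigmoid(x),
}

# PyTorch built-ins for the same functions
builtin = {
    "sigmoid": torch.sigmoid(x),
    "tanh": torch.tanh(x),
    "relu": F.relu(x),
    "leaky_relu": F.leaky_relu(x, negative_slope=0.01),
    "elu": F.elu(x),
    "swish": F.silu(x),
}

# Names of any functions whose hand-written version disagrees with the built-in
mismatches = [
    name for name in manual
    if not torch.allclose(manual[name], builtin[name], atol=1e-6)
]
print(mismatches)
```

Because each class subclasses `nn.Module`, any of them can be dropped into a model, for example `nn.Sequential(nn.Linear(10, 10), Swish())`.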